MiRo and Machine Vision

Introduction

In this tutorial, you will:

- learn about the various Machine Vision blocks in MiRoCODE

- observe how applying different image processing techniques can lead to different effects

- acquire an understanding of the most common algorithms and how to fine-tune their parameters

Important note

For this session, you need to use a new block group called Vision processing (currently in beta).

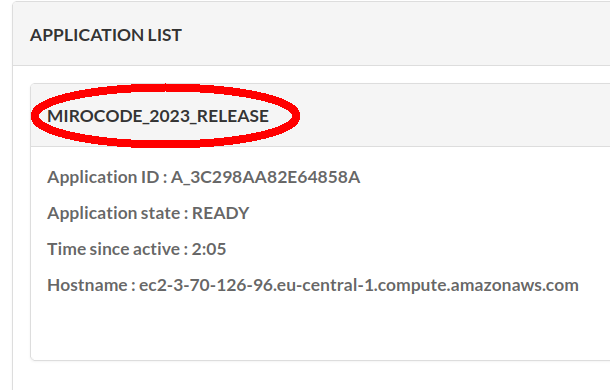

Make sure to select the MIROcode 2023 Beta image before starting your MiRoCode instance.

You can check which one is running by looking at the instance name in the Application List.

Overview of the Vision System in MiRoCODE

MiRo has two cameras, one mounted in each eye. In keeping with biomimetic principles, the cameras are positioned so that their fields of view overlap substantially. This configuration gives MiRo stereoscopic vision within the forward cone, which is useful for orienting towards objects and estimating distances.

By default, the cameras run at 640x360 pixel resolution at 15 frames per second.

The video feeds are shown in the bottom-right corner of the simulator window.

Tip

For the best possible view of the cameras, enlarge the right-hand panel of the MiRoCode interface and swap the camera views with the simulator view.

As you can see in the figure above, each image has a frame of reference drawn onto it to help locate parts of the image.

The horizontal axis, X, runs from -0.5 to 0.5, while the vertical axis, Y, runs from -0.28 to 0.28.

The units are dimensionless, normalised to the frame width: both axes are divided by the 640-pixel width, so the vertical extent is ±(360/640)/2 ≈ ±0.28.

This choice preserves the aspect ratio, which makes in-frame geometric computations more convenient.
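To make the normalised coordinates concrete, here is a minimal Python sketch that converts between pixel positions in a 640x360 frame and frame-width units. It assumes that pixel (0, 0) is the top-left corner and that normalised Y increases upwards. Check both conventions against the axes drawn on the camera images before relying on them. The function names are our own and are not part of MiRoCODE.

```python
# Convert between pixel positions and MiRoCODE-style normalised frame coordinates.
# ASSUMPTIONS (not confirmed by MiRoCODE): pixel (0, 0) is the top-left corner,
# normalised X increases to the right and normalised Y increases upwards.

FRAME_W = 640  # default camera width in pixels
FRAME_H = 360  # default camera height in pixels


def pixel_to_normalised(px, py, width=FRAME_W, height=FRAME_H):
    """Map a pixel position to dimensionless frame-width units."""
    x = (px - width / 2) / width
    # Divide Y by the width too (not the height), so the aspect ratio is preserved.
    y = (height / 2 - py) / width
    return x, y


def normalised_to_pixel(x, y, width=FRAME_W, height=FRAME_H):
    """Inverse of pixel_to_normalised."""
    px = x * width + width / 2
    py = height / 2 - y * width
    return px, py


if __name__ == "__main__":
    print(pixel_to_normalised(0, 0))      # (-0.5, 0.28125), top-left corner
    print(pixel_to_normalised(640, 360))  # (0.5, -0.28125), bottom-right corner
    print(pixel_to_normalised(320, 180))  # (0.0, 0.0), image centre
```

The corners land on ±0.5 horizontally and ±0.28125 vertically, which matches the ranges drawn on the camera images.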

Vision block group

The Vision block group is available in the standard MiRoCode 2021 release.

There are currently five Vision blocks. They support both low-level operations, such as getting the average colour of pixels in a region, and high-level operations, such as identifying shapes and objects.
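As an illustration of a low-level operation, the following sketch computes the average colour of a rectangular region. The image representation (a list of rows of (r, g, b) tuples) and the function name are assumptions made for this example. This is not the implementation behind the MiRoCODE block.

```python
# Average colour of the pixels inside a rectangular region of an RGB image.
# ASSUMPTIONS: the image is a list of rows, each row a list of (r, g, b) tuples;
# the region is given in pixel coordinates as half-open ranges [x0, x1) x [y0, y1).


def average_colour(image, x0, y0, x1, y1):
    """Return the mean (r, g, b) over the region, as floats."""
    total_r = total_g = total_b = 0
    count = 0
    for row in image[y0:y1]:
        for r, g, b in row[x0:x1]:
            total_r += r
            total_g += g
            total_b += b
            count += 1
    if count == 0:
        raise ValueError("region contains no pixels")
    return (total_r / count, total_g / count, total_b / count)


if __name__ == "__main__":
    # 2x2 test image: left column pure red, right column pure blue
    img = [
        [(255, 0, 0), (0, 0, 255)],
        [(255, 0, 0), (0, 0, 255)],
    ]
    print(average_colour(img, 0, 0, 2, 2))  # (127.5, 0.0, 127.5)
    print(average_colour(img, 0, 0, 1, 2))  # (255.0, 0.0, 0.0)
```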

Info

Note that all blocks in the Vision group have a left connector. This means they are meant to be plugged into other blocks that accept an input, such as print variable, compare or assign to.

Each Vision block has a few settings that allow you to:

- specify which camera to use

- specify which value to return in the output

- (for Object Detection blocks) customise the colour or shape of the objects to look for

Once a Vision block is in active use, the camera images show additional information (annotations) that depends on the block type. The example below shows the annotations for a successful AprilTag detection.

Vision processing block group

The MIROcode 2023 Beta image adds a new block group: Vision processing.

Again, make sure to select this image before starting your MiRoCode instance, so that these blocks are available.

Info

The main purpose of these blocks is to show how the various vision processing algorithms work in practice.

Apart from changing what the camera views display, they do not feed any values into your Blockly code and therefore cannot control code execution.

There are 12 blocks in this group. The main block at the top enables vision processing, and the rest represent the various algorithms and techniques that can be applied to an image.

In order to get started with these, the Process Left/Right camera images block needs to be placed somewhere in your code.

Other vision processing blocks can then be placed into the two placeholders, in various combinations and independently for left and right cameras.
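Conceptually, each placeholder holds a sequence of steps applied to that camera's image. The sketch below models this with plain Python functions on a small greyscale image. The step names are hypothetical stand-ins, not MiRoCODE blocks. It also assumes the steps run in the order they are stacked, which you should verify in MiRoCODE.

```python
# Hypothetical model of the "Process Left/Right camera images" block: each camera
# has its own ordered list of steps. The step functions are illustrative stand-ins,
# NOT MiRoCODE APIs, and we ASSUME steps run in the order they are stacked.


def invert(img):
    """Invert an 8-bit greyscale image."""
    return [[255 - p for p in row] for row in img]


def threshold(img, level=128):
    """Binarise: pixels at or above `level` become 255, the rest 0."""
    return [[255 if p >= level else 0 for p in row] for row in img]


def run_pipeline(img, steps):
    """Apply each step to the output of the previous one."""
    for step in steps:
        img = step(img)
    return img


left_steps = [threshold]           # left camera: threshold only
right_steps = [invert, threshold]  # right camera: invert, then threshold

if __name__ == "__main__":
    frame = [[10, 200], [130, 90]]
    print(run_pipeline(frame, left_steps))   # [[0, 255], [255, 0]]
    print(run_pipeline(frame, right_steps))  # [[255, 0], [0, 255]]
```

Because the two step lists are independent, changing one camera's steps leaves the other unaffected. This is what makes side-by-side comparisons of two techniques possible.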

Warning

Please note that image processing blocks will not work outside the main Process Left/Right camera images block. This includes blocks placed inside functions.

To see the resulting images, simply click on  .

The screenshot below shows an example program with a typical use of this block group. Avoid moving MiRo while these blocks are running, as the frames may change faster than they can be processed.

Bug

The processed images can take a long time to show up.

To force a redraw, simply switch to another tab in your browser, wait a bit and then switch back to the MiRoCode tab.

You can use CTRL + TAB in Firefox to do this quickly.

Bug

Switching back from processed images to the original images is known to break the visualisation.

If this happens, refresh the MiRoCODE page in your browser.

Better yet, close the MiRoCODE tab and open it again from the CQRHUB management page with the Launch command.

You can find the detailed description of the Vision Processing blocks on the Blocks Overview page.

Developing your own code

Now that you know the basics, it's time to see how some of these blocks can be applied. In your teams, see if you can do the following tasks.

Task 1. Highlight of the day

Using the cameras on the physical MiRo, see if you can use some form of segmentation to highlight an object in your surroundings. Any object will do, as long as it is shown against a black (or white!) background. Once you're happy with the result, take a screenshot of the MiRoCODE page with the processed image(s) of the object on the right and your code on the left.
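One simple form of segmentation is thresholding: pixels brighter (or darker) than a threshold are marked as the object. The sketch below picks the threshold automatically with Otsu's method, a common choice. We do not know which segmentation algorithms the Vision processing blocks use, and the greyscale list-of-lists image format is an assumption.

```python
# Threshold segmentation with Otsu's method on an 8-bit greyscale image
# (a list of rows of ints in 0..255). Illustrative sketch only.


def otsu_threshold(img):
    """Return the threshold t that maximises between-class variance.

    Pixels <= t form one class and pixels > t the other.
    """
    hist = [0] * 256
    for row in img:
        for p in row:
            hist[p] += 1
    total = sum(hist)
    sum_all = sum(i * h for i, h in enumerate(hist))

    sum_bg = 0
    weight_bg = 0
    best_t, best_var = 0, -1.0
    for t in range(256):
        weight_bg += hist[t]
        if weight_bg == 0:
            continue  # no pixels at or below t yet
        weight_fg = total - weight_bg
        if weight_fg == 0:
            break  # every pixel is at or below t; no split possible
        sum_bg += t * hist[t]
        mean_bg = sum_bg / weight_bg
        mean_fg = (sum_all - sum_bg) / weight_fg
        between_var = weight_bg * weight_fg * (mean_bg - mean_fg) ** 2
        if between_var > best_var:
            best_t, best_var = t, between_var
    return best_t


def segment(img, object_is_bright=True):
    """Return a binary mask (255 = object) using the Otsu threshold.

    Use object_is_bright=True for an object on a black background,
    False for an object on a white background.
    """
    t = otsu_threshold(img)
    if object_is_bright:
        return [[255 if p > t else 0 for p in row] for row in img]
    return [[255 if p <= t else 0 for p in row] for row in img]


if __name__ == "__main__":
    # Bright 2x2 "object" on a dark background
    img = [
        [20, 20, 20, 20],
        [20, 200, 200, 20],
        [20, 200, 200, 20],
        [20, 20, 20, 20],
    ]
    for row in segment(img):
        print(row)
```

The `object_is_bright` flag covers the black-or-white background choice in the task. On a white background, the object is the darker class.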

Task 2. Gallery

Load the gallery world in MiRoCloud.

Here, MiRo is admiring a photo exhibition (courtesy of Unsplash and the respective authors!). There are 6 photographs in total, arranged in two triptychs. You can rotate MiRo and move it between the two tables to get the best view of each photo.

Info

You won't see the pictures directly in the simulated world, but you can see them through MiRo's cameras.

Write a program using the vision processing blocks for each of the following:

- For a given photo, have the left camera detect vertical edges and the right camera detect horizontal edges.

- Highlight the biggest round object in a given photo.

- Highlight the most prominent features in a photo. Ideally, you should use two algorithms for feature detection/enhancement and compare them using the left and right cameras.

- [Difficult] Extract a silhouette (contour) of the most distinct object in a photo.
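For the first subtask above, a common way to separate vertical from horizontal edges is the Sobel operator. The X kernel responds to intensity changes along the horizontal axis (vertical edges), and the Y kernel responds to changes along the vertical axis (horizontal edges). This is a plain-Python sketch on a greyscale list-of-lists image, and it is not necessarily the edge detector the Vision processing blocks implement.

```python
# Sobel edge detection on an 8-bit greyscale image (list of rows of ints).
# Illustrative sketch only; border pixels are left at 0 for simplicity.

SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]  # horizontal gradient -> highlights VERTICAL edges

SOBEL_Y = [[-1, -2, -1],
           [ 0,  0,  0],
           [ 1,  2,  1]]  # vertical gradient -> highlights HORIZONTAL edges


def filter3x3(img, kernel):
    """Slide a 3x3 kernel over the image and return the absolute response.

    This is cross-correlation; for Sobel kernels, flipping the kernel (true
    convolution) only changes the sign, which abs() discards.
    """
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            acc = 0
            for ky in range(3):
                for kx in range(3):
                    acc += kernel[ky][kx] * img[y + ky - 1][x + kx - 1]
            out[y][x] = abs(acc)
    return out


if __name__ == "__main__":
    # 6x6 image with a single vertical step edge between columns 2 and 3
    img = [[0, 0, 0, 100, 100, 100] for _ in range(6)]
    left = filter3x3(img, SOBEL_X)   # "left camera": vertical edges
    right = filter3x3(img, SOBEL_Y)  # "right camera": horizontal edges
    print(max(max(r) for r in left))   # 400
    print(max(max(r) for r in right))  # 0
```

The vertical step lights up only in the X response. Responses can exceed 255, so a display pipeline would typically scale or clip them back to the 0 to 255 range.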

You can choose a different photo for each of the subtasks above (in fact, it will probably be easier if you do).

Don't forget to take screenshots of the code and the processed images at each stage.